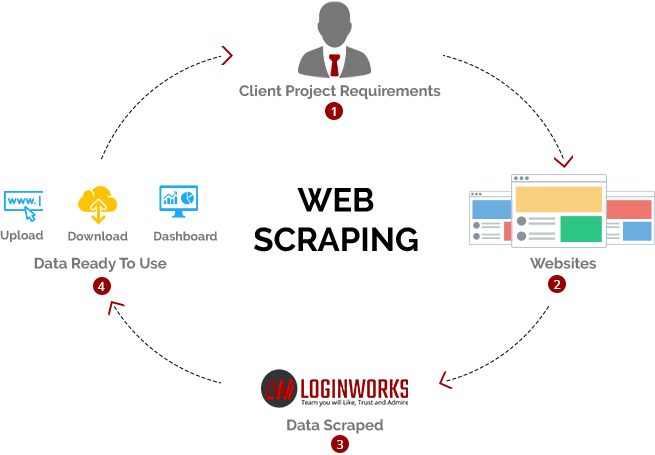

If a data vendor goes out of business or terminates its service, you could be left in the lurch. Datahut is a web scraping service that is as good as any you will find. You get a Service-Level Agreement (SLA), a form of legal guarantee that ensures timely delivery of data without compromising quality.

A web scraper is either custom-built for a specific website or can be configured, based on a set of parameters, to work with any website. With the click of a button, you can save the data available on a website to a file on your computer. The web is possibly the greatest source of information on the planet. Many disciplines, such as data science, business intelligence, and investigative reporting, can benefit enormously from collecting and analyzing data from websites. Scrape eCommerce sites to extract product prices, availability, reviews, prominence, brand reputation and more.
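As a rough illustration of a scraper that is configured by parameters rather than hard-wired to one site, the sketch below extracts the text of every element carrying a given CSS class. It uses only Python's standard `html.parser` module. The class names, the `scrape` helper, and the sample HTML are hypothetical; a real page would need its own selectors.

```python
from html.parser import HTMLParser

# Tags that never have a closing tag, so they must not affect nesting depth.
VOID_TAGS = {"br", "img", "hr", "input", "meta", "link"}


class ClassTextExtractor(HTMLParser):
    """Collect the text of every element whose class list contains class_name."""

    def __init__(self, class_name):
        super().__init__()
        self.class_name = class_name
        self.results = []
        self._depth = 0    # > 0 while we are inside a matching element
        self._buffer = []  # text fragments of the current match

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self._depth:
            self._depth += 1  # nested tag inside a match
            return
        classes = (dict(attrs).get("class") or "").split()
        if self.class_name in classes:
            self._depth = 1
            self._buffer = []

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self._depth:
            return
        self._depth -= 1
        if self._depth == 0:
            self.results.append("".join(self._buffer).strip())

    def handle_data(self, data):
        if self._depth:
            self._buffer.append(data)


def scrape(html, class_name):
    """Return the text of all elements in html that carry class_name."""
    parser = ClassTextExtractor(class_name)
    parser.feed(html)
    parser.close()
    return parser.results


# Hypothetical product listing used only for this example.
SAMPLE_HTML = """
<ul>
  <li><span class="name">Kettle</span> <span class="price">$24.99</span></li>
  <li><span class="name">Toaster</span> <span class="price sale">$19.50</span></li>
</ul>
"""

prices = scrape(SAMPLE_HTML, "price")
names = scrape(SAMPLE_HTML, "name")
```

Changing the `class_name` argument is all it takes to point the same code at a different field, which is the sense in which a scraper can be "configured" for different pages.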

This saves time and money, making web scraping an important tool in many industries. Many software tools are available that can be used to build custom web scraping solutions. Some web scraping software can also extract data directly from an API.

Social Media Evaluation

The common formats in which a web scraper outputs data are JSON, CSV, XML, or a simple spreadsheet. When you're coding your web scraper, it's important to be as specific as possible about what you want to collect. Keep things too vague and you'll end up with far too much data (and a headache!). It's best to invest some time up front to produce a clear plan. This will save you a lot of effort cleaning your data in the long run. The scraper then requests access to the site, extracts the data, and parses it. However, it should be noted that web scraping also has a darker side.
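To make the output formats concrete, here is a minimal sketch that serializes the same scraped records to JSON and to CSV using only the standard library. The records and the `to_json` and `to_csv` helpers are made up for illustration. Passing an explicit list of field names to the CSV writer is one simple way of being specific about which columns you actually want to keep.

```python
import csv
import io
import json

# Hypothetical records, standing in for data a scraper has already extracted.
records = [
    {"product": "Kettle", "price": 24.99, "in_stock": True},
    {"product": "Toaster", "price": 19.50, "in_stock": False},
]


def to_json(rows):
    """Serialize the rows as a pretty-printed JSON array."""
    return json.dumps(rows, indent=2)


def to_csv(rows, fieldnames):
    """Serialize only the chosen fields as CSV text; other keys are ignored."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


json_text = to_json(records)
csv_text = to_csv(records, ["product", "price"])
```

The CSV text can be opened directly in a spreadsheet application, while the JSON keeps every field and its type.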

- Our software has the technology to scale to your business needs.

- For example, you might use web scraping to pull website content and product details from an ecommerce site into a format that's easier to use.

- It's good to be aware of these risks before starting your own web scraping journey.

With content scraping, a thief targets the content of a website or database and then steals it. The thief can build a fake site that has exactly the same content as the target site. Because the two sites match so closely, it can be hard for an identity theft victim to tell the fake site from the real one. There are many different techniques and technologies that can be used to build a crawler and extract data from the web.

News And Web Content Marketing

For example, if you are interested in the financial markets, you can scrape for content that specifically relates to that sector. You can then aggregate the stories into a spreadsheet and analyze their content for keywords that make them more relevant to your particular business. For instance, you can collect data from Securities and Exchange Commission filings to get an understanding of the relative health of different companies.
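The keyword analysis described here can be sketched in a few lines. The example below counts how often a set of keywords appears in each story and ranks the stories by that count. The keyword set, the sample stories, and the function names are all hypothetical, and a real pipeline would likely need stemming or phrase matching on top of this.

```python
import re
from collections import Counter

# Hypothetical keywords of interest for a finance-focused reader.
KEYWORDS = {"earnings", "dividend", "merger"}

# Hypothetical scraped stories.
stories = [
    {"title": "Weather update", "body": "Rain is expected this weekend."},
    {
        "title": "Acme posts record earnings",
        "body": "Acme reported earnings growth and raised its dividend.",
    },
]


def keyword_counts(text, keywords):
    """Count occurrences of each keyword in text, ignoring case."""
    words = re.findall(r"[a-z']+", text.lower())
    return Counter(word for word in words if word in keywords)


def rank_stories(items, keywords):
    """Return (score, title) pairs, highest keyword score first."""
    scored = [
        (sum(keyword_counts(item["body"], keywords).values()), item["title"])
        for item in items
    ]
    return sorted(scored, reverse=True)


ranking = rank_stories(stories, KEYWORDS)
```

Each `(score, title)` pair maps naturally onto a spreadsheet row, so the ranking can be exported for further review.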

We already mentioned that web scraping isn't always as simple as following a step-by-step process. Here are some additional things to consider before scraping a website. As an individual, when you visit a website through your browser, you send what's called an HTTP request. This is basically the digital equivalent of knocking on the door and asking to come in. Once your request is approved, you can then access that site and all the information on it.
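A scraper sends the same kind of HTTP request a browser does. The sketch below builds a GET request with Python's standard `urllib.request` module and shows, separately, how it could be sent. The URL, the `example-scraper/0.1` user agent string, and the helper names are placeholders chosen for this example, and `fetch` is defined but not called here so that the snippet runs without network access.

```python
from urllib.request import Request, urlopen


def build_request(url, user_agent="example-scraper/0.1"):
    """Build a GET request that identifies the client via a User-Agent header."""
    return Request(url, headers={"User-Agent": user_agent}, method="GET")


def fetch(url, timeout=10):
    """Send the request and return (status code, decoded body).

    This performs real network I/O, so it is not called in this example.
    """
    with urlopen(build_request(url), timeout=timeout) as response:
        return response.status, response.read().decode("utf-8", errors="replace")


# Inspect the request object without sending it.
request = build_request("https://example.com/")
method = request.get_method()
target = request.full_url
agent = request.get_header("User-agent")  # urllib stores the key in this casing
```

The server decides how to answer based on the method, the URL, and headers such as `User-Agent`, which is why well-behaved scrapers identify themselves honestly.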

The 10th Annual Bad Bot Report

Behavior analysis: Tracking the ways visitors interact with a website can reveal abnormal behavior patterns, such as a suspiciously aggressive rate of requests and illogical browsing patterns.

The filtering process starts with a granular inspection of HTML headers. These can provide clues as to whether a visitor is a human or a bot, and malicious or safe. Header signatures are compared against a constantly updated database of over 10 million known variants.
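One of the behavioral signals mentioned above, an aggressive rate of requests, can be approximated with a sliding-window counter. This is only a sketch of the general idea, not the method any particular vendor uses. The `RateMonitor` class, its thresholds, and the client identifiers are assumptions made for the example.

```python
from collections import defaultdict, deque


class RateMonitor:
    """Flag clients that exceed max_requests within window_seconds."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # client id -> recent request timestamps

    def record(self, client_id, timestamp):
        """Record one request; return True if the client now looks too aggressive."""
        hits = self._hits[client_id]
        hits.append(timestamp)
        # Drop timestamps that have fallen out of the sliding window.
        while hits and timestamp - hits[0] >= self.window_seconds:
            hits.popleft()
        return len(hits) > self.max_requests


# Hypothetical traffic: at most 3 requests per second are considered normal.
monitor = RateMonitor(max_requests=3, window_seconds=1.0)
bot_flags = [monitor.record("bot", t) for t in (0.0, 0.1, 0.2, 0.3)]
human_flags = [monitor.record("human", t) for t in (0.0, 5.0, 10.0)]
```

In practice a signal like this would be combined with others, such as header inspection, before a visitor is classified as a bot.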